I purchased a new Apple Mac Mini recently and didn’t want to fall victim to Apple’s “RAM Tax”.

I used Apple’s site to configure a Mac Mini with a quad-core processor, 32GB RAM, and a 512GB SSD.

I was shocked to see they added £600.00 to the price of a base model with 8GB RAM. They’re effectively charging all of this money for 24GB of extra RAM. This memory is nothing special: it’s pretty standard 2666MHz DDR4 SODIMM, the same stuff that is used in generic laptops.

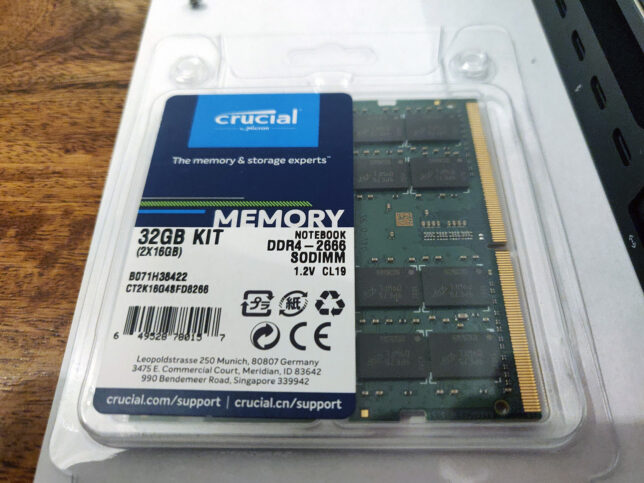

I decided to cut back my order to the base model with 8GB of RAM. I ordered a Crucial 32GB Kit (2 x 16GB DDR4-2666 SODIMM modules running at 1.2 volts with a CAS latency of 19 clock cycles, i.e. CL19). This kit cost me just over £100.00 online.

In total I saved around £500.00 for the trouble of about 30 minutes of work to open up the Mac Mini and replace the RAM modules myself.
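To put those numbers side by side, here is a rough Python sketch of the arithmetic. The variable names are just for illustration, and the kit price is rounded down to £100.00 (it actually cost slightly more), so treat the output as approximate.

```python
# Prices in GBP, taken from the figures quoted above.
apple_upgrade_price = 600.00  # Apple's charge to go from 8GB to 32GB
apple_extra_gb = 32 - 8       # extra RAM gained by paying Apple
kit_price = 100.00            # assumption: rounded, the kit was "just over" this
kit_gb = 32                   # the kit replaces both original modules


def price_per_gb(price, gigabytes):
    """Return the cost of one gigabyte at the given total price."""
    return price / gigabytes


apple_per_gb = price_per_gb(apple_upgrade_price, apple_extra_gb)
kit_per_gb = price_per_gb(kit_price, kit_gb)
saving = apple_upgrade_price - kit_price

print(f"Apple upgrade: £{apple_per_gb:.2f} per extra GB")
print(f"Crucial kit:   £{kit_per_gb:.2f} per GB")
print(f"Saving:        £{saving:.2f}")
```

On these rounded figures Apple's upgrade works out at roughly eight times the per-gigabyte price of the kit, and that is before counting the 8GB of original RAM you get to keep as spares.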

The Teardown Process

Use the iFixit Guide

You can use my photos and brief explanations below if you would like to follow the steps I took to replace the RAM, but honestly, you’re better off following iFixit’s excellent guide here.

Follow along Here

If you want to compare or follow along in my format, then read on…

Get a good tool kit with hex screwdrivers. I used iFixit’s basic kit.

Flip the Mac Mini upside down.

Carefully pry open the back cover with a plastic prying tool.

Undo the six hex screws on the metal plate under the black plastic cover. Be careful to remember their positions, as there are two different types: three short screws and three longer ones.

Very carefully, move the cover to the side, revealing the WiFi antenna connector. Unscrew the small hex screw holding the metal tab on the cable. Use a plastic levering tool to carefully pop the antenna connector off.

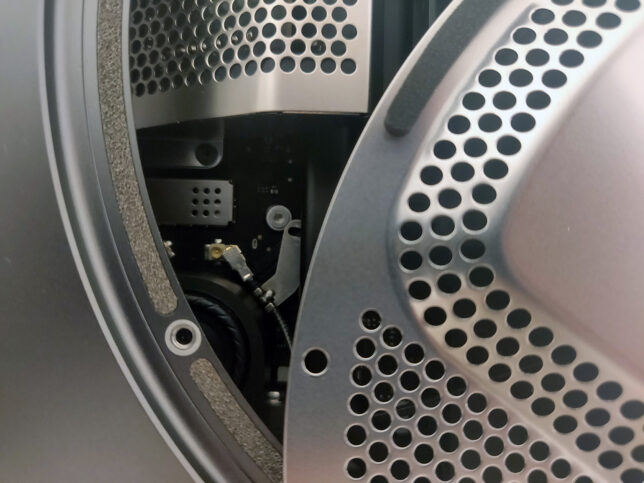

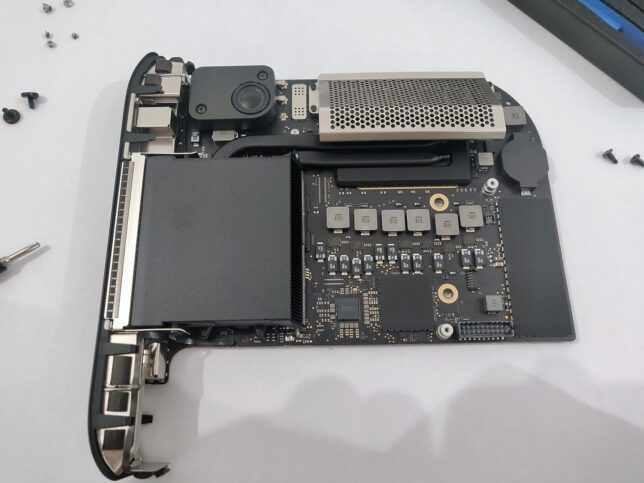

Next, unscrew the four screws that hold the blower fan to the exhaust port. You can see one of the screws in the photo below. Two of the screws sit at a 45 degree angle, so undo those carefully and use tweezers to catch them as they come out.

Carefully lift the blower fan up, and disconnect its cable using a plastic pick or prying tool. The trick is to lift from underneath the back of the cable’s connector and it’ll pop off.

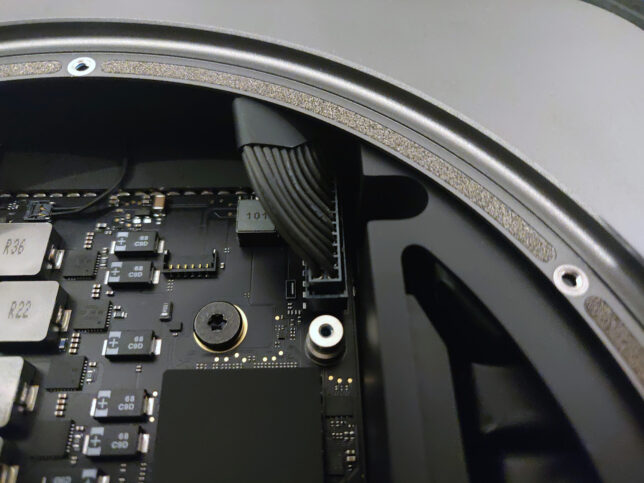

Next, disconnect the main power cable at the top right of the photo below. This requires a little bit of wiggling to loosen and lift it as evenly as possible.

Now disconnect the LED cable (two pin). It’s very delicate, so do this as carefully as possible.

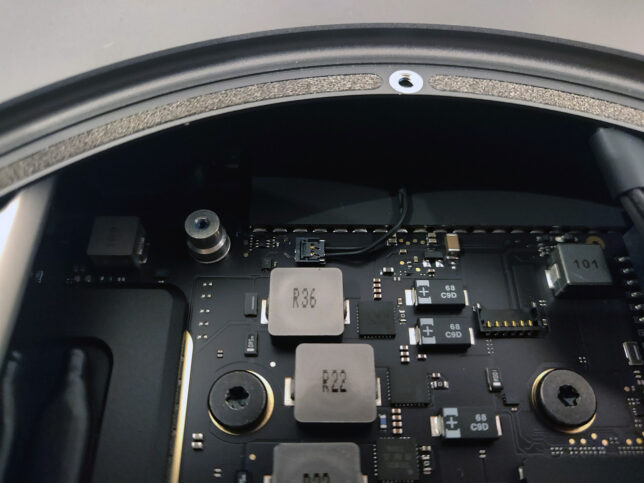

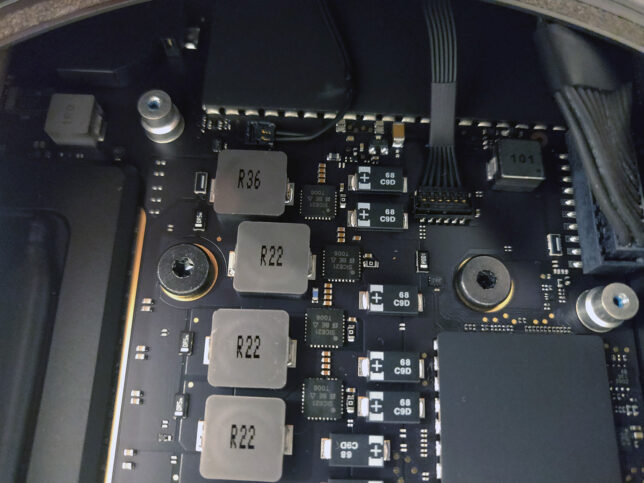

Now remove the two main hex screws from the central area of the motherboard. You can see them removed below near the middle (where the brass/gold coloured rings are).

With everything disconnected, carefully push the inner motherboard and its tray out, using your thumbs on the fan’s exhaust port. Ideally, position your thumbs on the screw hole areas of the exhaust port. The tray will pop loose, and you can then very carefully push it all the way out.

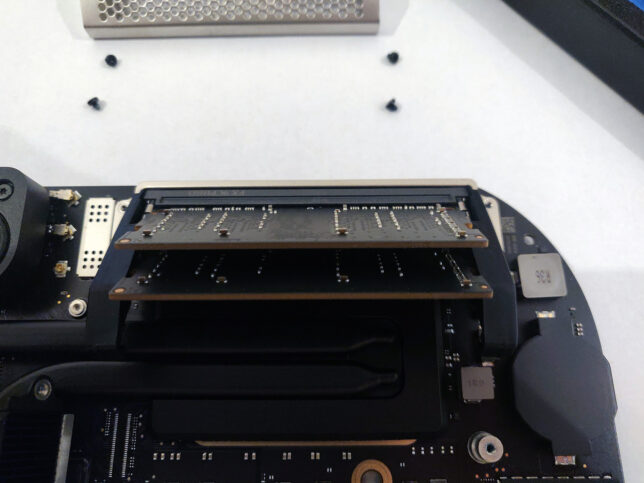

The RAM area is protected by a metal ‘cage’. Unscrew its four hex screws and slowly lift the cage off the RAM retainer clips.

Carefully push the RAM module retainer clips to the side (they have a rubber grommet type covering over them), and the existing SODIMM modules will pop loose.

Remove the old modules and replace with your new ones. Make sure you align the modules in the correct orientation. The slots are keyed, so pay attention to that. Push them down toward the board once aligned and the retainer clips will snap shut and lock them in place.

Replace the RAM ‘cage’ and refit its four hex screws.

Reverse the steps you took above to insert the motherboard tray back into the chassis and re-attach all the cables and connectors in the correct order.

Make sure you didn’t miss any screws or cables when reconnecting everything.

Finally, boot up and enjoy your cheap RAM upgrade.